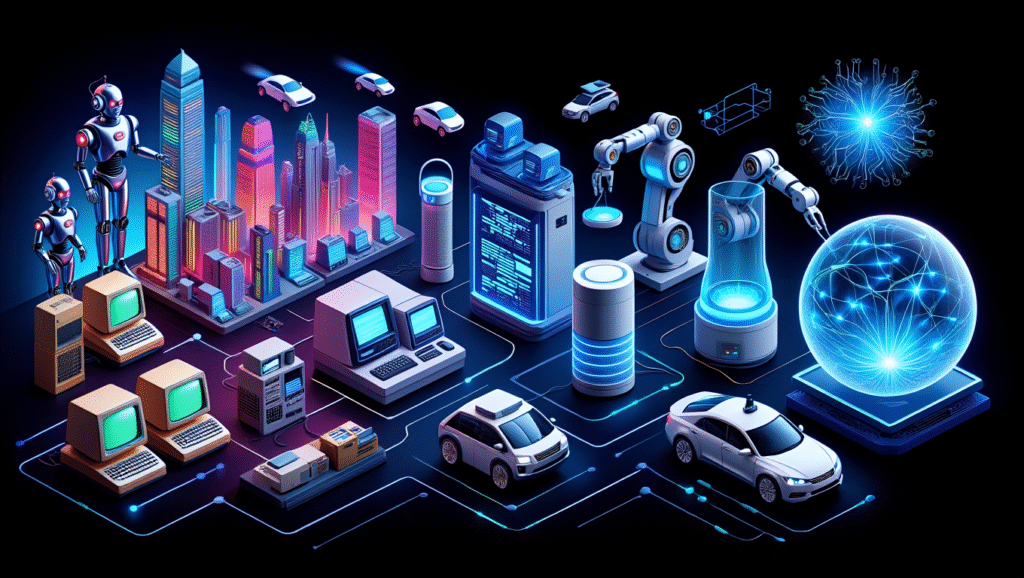

Artificial Intelligence (AI) has come a long way from being a spark of imagination in science fiction novels to powering the devices in our pockets. What was once a far-fetched dream of thinking machines is now a reality that shapes how we work, communicate, and live. In this blog post, we’ll take a journey through the evolution of AI, from its early roots in philosophy and fiction to its transformative role in today’s world, all explained in a way that feels relatable and real.

The Seeds of AI: Early Ideas and Sci-Fi Visions

The notion of machines that could think like humans has thrilled and unsettled people for centuries. Long before computers existed, philosophers such as René Descartes in the 1600s wrestled with whether a machine could ever think like a human. But it was science fiction that truly brought AI to life in the public imagination. The word “robot” entered the language in the 1920s through Karel Čapek’s Czech play R.U.R., which depicted artificial humanlike workers. By the 1950s, Isaac Asimov’s stories of robots programmed with ethical codes, along with films like Forbidden Planet, had begun to sketch vivid images of intelligent machines, fueling fascination with what might be achievable.

These early dreams weren’t just entertainment; they dared scientists to ask, “Could we actually build something like this?” In doing so, they set the stage for AI to move from fiction to fact.

The Birth of AI: The 1950s and 1960s

Computer scientist John McCarthy coined the term “artificial intelligence” in his proposal for the 1956 Dartmouth Summer Research Project, a workshop now regarded as the formal beginning of AI as a field of study. Early researchers believed they could build machines that replicated human reasoning, and they set to work on programs that tackled problems such as playing chess and proving mathematical theorems.

One of the first breakthroughs was the Logic Theorist, developed by Allen Newell, Herbert Simon, and Cliff Shaw in 1955. It could prove theorems in symbolic logic, making it a kind of digital mathematician. By the 1960s, programs such as the General Problem Solver and early chatbots like ELIZA suggested that computers could perform tasks with a degree of intelligence. These systems were simple and relied on rigid rules; they could not learn or adapt the way humans do. Still, the excitement was real, and AI research had begun in earnest.

The Rollercoaster Years: 1970s to 1990s

The development of AI has been anything but a straight line. The 1970s and 1980s brought major setbacks known as “AI winters,” periods when funding dried up because progress failed to keep pace with expectations. Early AI programs were often slow and cumbersome, demanded more processing power than the computers of the day could supply, and broke down when faced with the messy complexity of the real world. Even teaching a computer to understand a simple sentence was a monumental task.

There were, however, some high points. In the 1980s, “expert systems” took off. These programs were designed to emulate human specialists in a particular field, such as medicine or engineering, by encoding their knowledge as if-then rules. A well-known forerunner was MYCIN, developed at Stanford in the 1970s, which used hundreds of such rules to identify bacterial infections from a patient’s symptoms and test results and to recommend treatment; in evaluations, its recommendations compared favorably with those of specialists. Meanwhile, AI was entering popular culture: films such as WarGames and The Terminator kept intelligent machines in the public eye during this period of lowered expectations, even as researchers waited for progress.
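To make the idea of rule-based reasoning a little more concrete, here is a minimal Python sketch of an expert system’s core loop. It assumes a simple forward-chaining strategy and uses made-up rules and fact names (`RULES`, `forward_chain`, “positive_culture”, and the rest are hypothetical, not MYCIN’s actual rules). MYCIN itself reasoned backward from diagnostic goals and attached certainty factors to its conclusions, which this toy version leaves out.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule pairs a set of required facts with the single fact it concludes.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy rules for illustration only: they are NOT taken from
# MYCIN's knowledge base and are not medical advice.
RULES: List[Rule] = [
    (frozenset({"fever", "cough"}), "respiratory_infection_suspected"),
    (frozenset({"respiratory_infection_suspected", "positive_culture"}),
     "bacterial_infection_likely"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Set[str]:
    """Keep firing rules whose conditions are all known until nothing new is learned."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only when every one of its conditions is already a known fact.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


patient = {"fever", "cough", "positive_culture"}
print(sorted(forward_chain(patient, RULES)))
```

Every conclusion here traces back to a rule a human wrote by hand, which is why such systems struggled whenever the real world went beyond their rules.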

By the 1990s, machine learning had moved to the center of AI research. Instead of hand-writing rules, researchers increasingly built systems that learn patterns from data. In 1997, IBM’s Deep Blue defeated world chess champion Garry Kasparov in a six-game match. Deep Blue did not think like a human; it relied largely on searching through enormous numbers of possible moves. Even so, the victory marked a major turning point for AI.

The Modern AI Boom: 2000s to Today

The 21st century has been AI’s golden era, driven by three big factors: massive amounts of data, powerful computers, and smarter algorithms. Here’s how it unfolded:

- Big Data: The internet explosion meant we suddenly had billions of photos, texts, and videos to feed AI systems. More data meant better learning.

- Computing Power: Advances in hardware, like GPUs (graphics processing units), gave AI the muscle to crunch numbers at lightning speed.

- New Algorithms: A technique called deep learning, inspired by the human brain, took AI to new heights. Deep learning uses neural networks—layers of digital “neurons”—to spot patterns in data, like recognizing faces or translating languages.
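As a rough illustration of “layers of digital neurons,” the Python sketch below wires up a tiny two-layer network by hand. The weights are hypothetical values chosen so the network computes XOR (output 1 when exactly one input is 1), a pattern a single neuron of this kind cannot capture on its own. Real deep learning systems use many more layers and smoother activation functions, and they learn their weights from data instead of having them set manually.

```python
from typing import List


def step(z: float) -> int:
    """A neuron 'fires' (1) if its weighted input is positive, otherwise stays silent (0)."""
    return 1 if z > 0 else 0


def layer(inputs: List[float], weights: List[List[float]], biases: List[float]) -> List[int]:
    """One layer: each neuron takes a weighted sum of all inputs plus its bias."""
    return [
        step(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hand-picked, hypothetical weights; a real network would learn these from data.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [-0.5, -1.5]   # neuron 1 behaves like OR, neuron 2 like AND
OUTPUT_WEIGHTS = [[1.0, -1.0]]
OUTPUT_BIASES = [-0.5]         # "OR but not AND" is XOR


def network(x1: int, x2: int) -> int:
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    return layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", network(a, b))
```

Running it prints the XOR truth table: 0 for inputs (0, 0) and (1, 1), and 1 for (0, 1) and (1, 0). The hidden layer detects two simple patterns, and the output layer combines them into one that neither neuron could detect alone.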

In 2011, IBM’s Watson wowed the world by winning Jeopardy!, showing that AI could handle natural language and trivia with flair. In 2016, AlphaGo, developed by Google’s DeepMind, defeated Lee Sedol, one of the world’s top Go players. Go has vastly more possible board positions than chess, so the win showed that AI could master a challenge long considered out of reach.

Today, AI is everywhere. Voice assistants such as Siri and Google Assistant understand your speech thanks to natural language processing. Recommendation algorithms on Netflix and Spotify can seem to know your tastes better than some of your friends. Driver-assistance and self-driving systems, like those from Tesla, use AI to navigate roads. Even healthcare is being transformed: AI helps doctors read medical scans and detect diseases earlier.

AI’s Real-World Impact

AI has become a quiet companion in our daily lives. When you shop online, AI suggests products based on what you have been browsing. When you scroll through social media, AI curates your feed. In business, AI helps optimize everything from supply chains to energy grids. It may even help save lives: some tools can flag early signs of cancer in medical images, and others estimate a patient’s heart-attack risk from data such as readings from wearable devices.

However, the story is not entirely positive. AI raises difficult questions. Who owns the data it relies on? Can it be biased? (Spoiler: yes, if the data it is trained on is biased.) And what about jobs? The million-dollar question is whether AI will displace jobs or create new ones. Society is still working out answers to these challenges.

What’s Next for AI?

The journey of AI is just beginning. Researchers are pursuing artificial general intelligence (AGI): systems that could think and adapt like humans across any task. We are not there yet, but progress continues in many areas, including quantum computing and ethical AI design. Wouldn’t it be amazing to have AI that can write novels, diagnose rare diseases, or even help fight climate change by modeling complex systems?

Meanwhile, films such as Ex Machina and Her remind us of our responsibilities in creating machines that may one day think or feel the way we do. As the line between dream and reality blurs, it will be up to us to guide the future of AI responsibly.

Final Thoughts

Whether you’re pondering philosophy, dreaming of sci-fi, or using the technology in your pocket, the story of AI is a long history of human curiosity and ingenuity. What began as a radical idea, machines that think, has evolved into a powerful tool capable of reshaping our world. From improving your commute to helping scientists unlock new discoveries, AI is no longer merely speculative; it is part of everyday life.

So the next time you ask your phone for directions or binge a show that was recommended to you, consider how far AI has come. It’s not just technology; it’s a story of imagination becoming reality, and we all have a part in its next chapter.